Project Snapshot

- Domain: Deep Learning, Image Classification
- Objective: Classify distracted driver behaviors
- Model: ResNet9
- Dataset: State Farm Distracted Driver Detection (Kaggle)
- Results: Validation Accuracy: 99.22%, F1 Score: 99.32%
- Role: Research Intern
- Institution: IIT Hyderabad
- Supervisor: Prof. P. Rajalakshmi
- Duration: December 2023 - May 2024

Project Overview

The Challenge

Distracted driving is a significant contributor to road accidents worldwide. Identifying and classifying distracted behaviors in real time is crucial for improving road safety and building preventative systems. This project addresses the problem of accurately detecting different forms of driver distraction from images using computer vision.

Our Solution

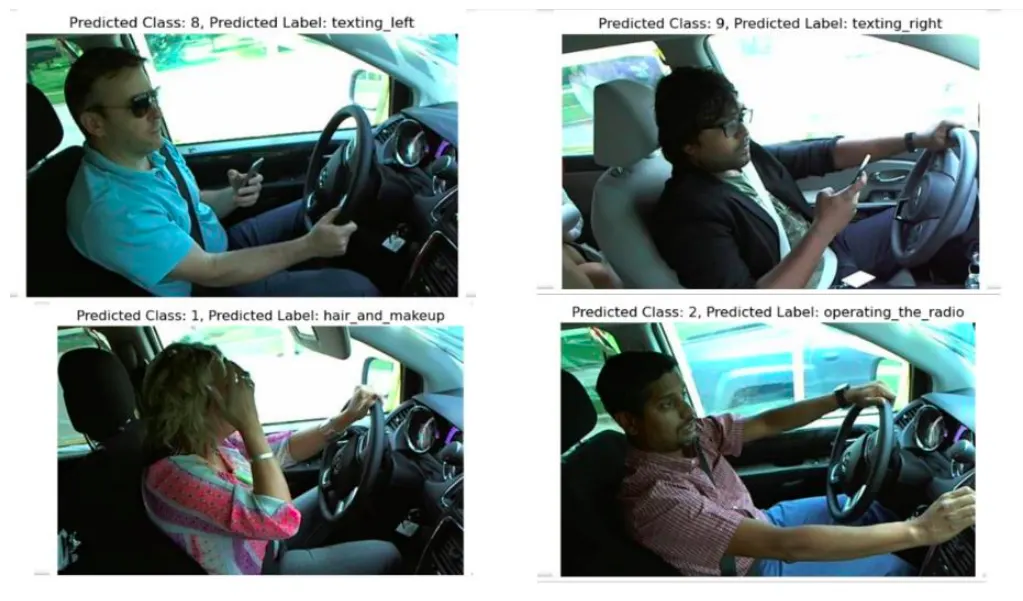

This project uses the ResNet9 deep learning architecture to classify images into ten driver behavior classes: nine types of distraction plus safe driving. The model was trained on the State Farm Distracted Driver Detection dataset from Kaggle with image augmentation, with the aim of producing an accurate model that generalizes well and can contribute to safer driving.
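The augmentation idea can be illustrated without any imaging library. The following is a minimal sketch under stated assumptions, not the project's actual pipeline: it treats an image as a nested list of pixel values and applies a random crop followed by an optional horizontal flip. The function names (`horizontal_flip`, `random_crop`, `augment`) and the 0.5 flip probability are hypothetical, and random rotation is left out for brevity.

```python
import random


def horizontal_flip(image):
    """Mirror a 2-D image (a list of pixel rows) left to right."""
    return [row[::-1] for row in image]


def random_crop(image, size, rng):
    """Cut a random size x size window out of the image."""
    height, width = len(image), len(image[0])
    top = rng.randint(0, height - size)    # randint is inclusive on both ends
    left = rng.randint(0, width - size)
    return [row[left:left + size] for row in image[top:top + size]]


def augment(image, crop_size, rng, flip_prob=0.5):
    """Apply a random crop, then flip horizontally with probability flip_prob."""
    out = random_crop(image, crop_size, rng)
    if rng.random() < flip_prob:
        out = horizontal_flip(out)
    return out


# Toy 4x4 "image" whose pixel values encode their own position.
rng = random.Random(0)  # fixed seed so the example is repeatable
image = [[r * 4 + c for c in range(4)] for r in range(4)]
sample = augment(image, crop_size=3, rng=rng)
```

Because each call draws a new crop position and flip decision, the model sees a slightly different version of every training image in each epoch.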

Approach & Methodology

- Deep Learning with ResNet9: Used the lightweight ResNet9 architecture for its efficiency. Its skip connections help it learn complex image features while mitigating vanishing gradients.

- Dataset & Preprocessing: Trained the model on the State Farm Distracted Driver Detection dataset, which comprises images across ten different driver behaviors (e.g., texting, talking on phone, safe driving).

- Image Augmentation: Applied random rotation, random cropping, and horizontal flipping during training. Exposing the model to a wider variety of inputs improved its robustness and generalization.

- Comparative Analysis: Compared ResNet9 with the larger, more complex ResNet50. ResNet9 achieved comparably high accuracy with a much simpler architecture, which makes it suitable for deployment in resource-constrained environments.
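To give a sense of the size gap behind the comparison, the sketch below counts learnable parameters for one common ResNet9 layout. The layout is an assumption, since the exact variant used in the project is not specified: four 3x3 convolution blocks with batch normalization (64, 128, 256, 512 channels), two residual blocks of two convolutions each, and a linear classifier. The helper names `conv_block_params` and `resnet9_params` are hypothetical.

```python
def conv_block_params(in_ch, out_ch, kernel=3):
    """Parameters in one Conv2d (with bias) followed by BatchNorm2d."""
    conv = in_ch * out_ch * kernel * kernel + out_ch  # weights + biases
    batch_norm = 2 * out_ch                           # learnable scale and shift
    return conv + batch_norm


def resnet9_params(in_channels=3, num_classes=10):
    """Total learnable parameters for the assumed ResNet9 layout."""
    total = 0
    total += conv_block_params(in_channels, 64)
    total += conv_block_params(64, 128)
    total += 2 * conv_block_params(128, 128)   # first residual block
    total += conv_block_params(128, 256)
    total += conv_block_params(256, 512)
    total += 2 * conv_block_params(512, 512)   # second residual block
    total += 512 * num_classes + num_classes   # linear classifier
    return total


resnet9_total = resnet9_params()
print(f"Assumed ResNet9 layout: {resnet9_total:,} parameters")  # 6,575,370
```

For reference, the standard ResNet50 has roughly 25.6 million parameters in its 1000-class ImageNet configuration, so under these assumptions ResNet9 is about a quarter of its size.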

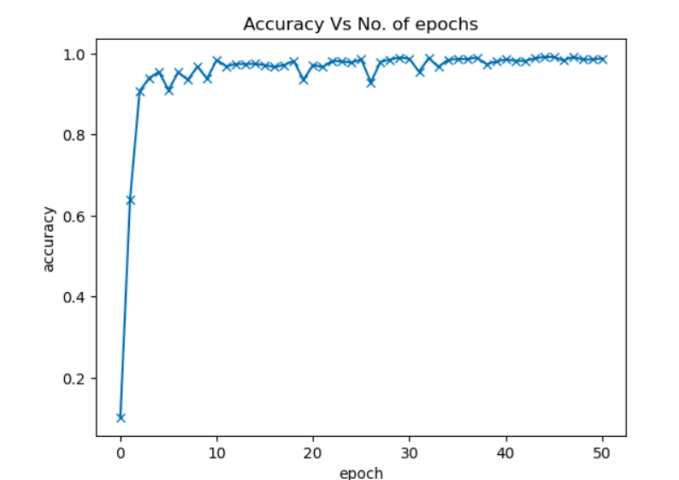

Key Results & Visualizations

Achieved Metrics:

- Validation Accuracy: 99.22%
- F1 Score: 99.32%
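For readers unfamiliar with the two metrics, here is a minimal sketch of how accuracy and a multi-class F1 score can be computed from true and predicted labels. It assumes macro averaging (the unweighted mean of per-class F1), which may differ from the averaging used for the figure reported above. The function names and the toy labels are illustrative only and are not project data.

```python
from collections import Counter


def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def macro_f1(y_true, y_pred, num_classes):
    """Unweighted mean of per-class F1 scores (macro averaging, an assumption)."""
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1
        else:
            fp[p] += 1  # predicted class p, but it was wrong
            fn[t] += 1  # true class t was missed
    scores = []
    for c in range(num_classes):
        denom = 2 * tp[c] + fp[c] + fn[c]
        # F1 = 2TP / (2TP + FP + FN); treated as 0 when the class never appears
        scores.append(2 * tp[c] / denom if denom else 0.0)
    return sum(scores) / num_classes


# Toy labels for three classes (not the project's validation data).
y_true = [0, 0, 1, 1, 2, 2]
y_pred = [0, 0, 1, 2, 2, 2]
toy_accuracy = accuracy(y_true, y_pred)
toy_macro_f1 = macro_f1(y_true, y_pred, num_classes=3)
```

Macro F1 weights every class equally regardless of how many samples it has, which is why it is often reported alongside accuracy when class sizes differ.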

Technologies Used

Lessons Learned & Challenges

- Data Imbalance: Addressing potential class imbalance within the dataset was crucial for achieving high and balanced performance across all distraction categories.

- Overfitting Mitigation: Extensive image augmentation proved vital in preventing overfitting, especially with a relatively small, domain-specific dataset.

- Architectural Choice: Showing that a simpler architecture like ResNet9 can achieve results comparable to those of much larger models (like ResNet50) highlights the importance of selecting the right tool for the specific problem, balancing accuracy against computational cost.

- Impact of Skip Connections: Gained a deeper understanding of how skip connections in ResNet combat the vanishing gradient problem, enabling the training of deeper networks.
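The effect of skip connections on gradients can be seen in a scalar toy model. This is an illustrative sketch, not the trained network: each "layer" computes f(h) = w * tanh(h), and the residual variant outputs h + f(h). By the chain rule, a plain layer contributes a factor f'(h) to the end-to-end gradient, while a residual layer contributes 1 + f'(h). The function name `chain_gradient`, the depth of 30, and the weight of 0.5 are arbitrary choices for the demonstration.

```python
import math


def chain_gradient(depth, weight, use_skip, x=0.5):
    """Return d(output)/d(input) for a chain of `depth` scalar layers.

    Each layer computes f(h) = weight * tanh(h). With use_skip=True the
    layer output is h + f(h), mimicking a residual connection.
    """
    grad = 1.0
    h = x
    for _ in range(depth):
        local = weight * (1.0 - math.tanh(h) ** 2)  # f'(h)
        if use_skip:
            grad *= 1.0 + local            # identity path keeps the factor >= 1 here
            h = h + weight * math.tanh(h)
        else:
            grad *= local                  # factor <= weight, so the product shrinks
            h = weight * math.tanh(h)
    return grad


plain = chain_gradient(depth=30, weight=0.5, use_skip=False)
residual = chain_gradient(depth=30, weight=0.5, use_skip=True)
print(f"plain: {plain:.3e}, residual: {residual:.3e}")
```

With these settings the plain chain's gradient is bounded by 0.5 to the power 30 (below 1e-9), while the residual chain's gradient stays at or above 1, because the identity path always passes the signal through.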

Future Goals

Future work includes integrating the ResNet9 model into real-time distracted driver detection systems for smart vehicles, deploying it on edge devices for in-car use, and evaluating additional deep learning architectures to further improve accuracy and computational efficiency.